Openpilot ran on a very limited set of Android phones, notably the LeEco Le Pro 3 and some OnePlus phones. We changed that with flowpilot, which can run on most Snapdragon phones. Now it was time to expand further, to non-Snapdragon phones. We must be joking? No.

This is a OnePlus Nord CE 2 running on a MediaTek Dimensity 900. Soon after, other users [1], [2] also successfully deployed flowpilot on their MediaTek devices. The ride to get here has been quite bumpy.

Technical Details

flowpilot can technically run on any device on the CPU. If you have a powerful enough CPU, you are good to go. The problem comes when you need to lower costs and deploy flowpilot on edge devices like Android phones and Jetsons. modeld offloads vision model execution to the GPU if one is present. A GPU runs the neural network at high speed and at a fraction of the power the CPU would need for the same model. A user tried running on the CPU and the results were horrible: almost 300 ms to process a single frame. Ideally, it should be less than 50 ms.
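To put those two latencies in perspective, here is a quick back-of-the-envelope calculation. It only uses the 300 ms and 50 ms figures quoted above and assumes frames are processed strictly one after another; the helper name is ours, purely for illustration.

```python
def frames_per_second(latency_ms: float) -> float:
    """Upper bound on throughput when each frame takes latency_ms to process."""
    return 1000.0 / latency_ms


cpu_fps = frames_per_second(300.0)    # CPU-only figure reported by the user
target_fps = frames_per_second(50.0)  # the "less than 50 ms" target

print(f"CPU only: {cpu_fps:.1f} frames/s")
print(f"Target:   {target_fps:.1f} frames/s")
print(f"Speedup needed: {300.0 / 50.0:.0f}x")
```

In other words, the CPU-only run manages roughly 3 frames per second where about 20 are wanted, so the model needs to run around 6x faster.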

SNPE

SNPE (Snapdragon Neural Processing Engine) is a high-performance neural network engine by Qualcomm that is restricted to run only on Snapdragon devices. SNPE uses OpenCL as its backend for GPU execution. Most modern phones support OpenCL, so technically SNPE should work on non-Snapdragon devices too, right? No. They probably have this condition in their code:

    if (chipset != "Snapdragon") {
        exit();
    }

Mediatek’s neuropilot joke

Like SNPE, MediaTek also has its own neural network SDK, named NeuroPilot. On the NeuroPilot homepage, they ask you to send them an email detailing why you want access to the SDK. This must have been the tactic of one of those MBA morons. But OK, we sent the email anyway, just to receive this in response.

Pathetic. We tried reaching out to MediaTek people on LinkedIn with no luck. Dumping NeuroPilot forever, period.

Problem with other nn runners

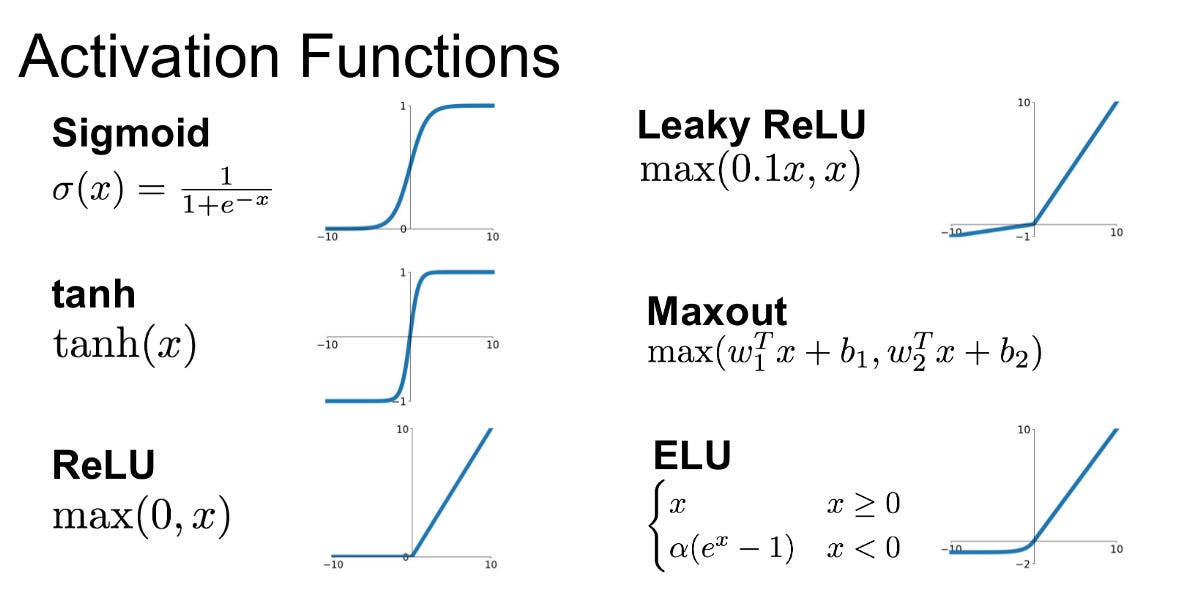

Other than these, we tried other open-source network runners. The supercombo model uses layers that most network runners don’t support on GPU. First we tried ncnn by Tencent. It’s really well made. We wrote a small script to benchmark the model on GPU, just to find out that the ELU layer is unsupported on GPU. When a layer is not supported on GPU, the data gets copied back to the CPU, processed for that layer on the CPU and then moved back to the GPU. This back-and-forth movement of data between CPU and GPU takes significant time, and it can add up to more than running the whole model on the CPU alone.
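A toy cost model makes the fallback penalty easier to see. Everything here is an assumption for illustration: the function names are ours, every unsupported layer is assumed to sit between two supported ones (so it pays one copy in each direction), and the numbers are made up rather than measured on any device.

```python
def gpu_time_with_fallback(n_layers, n_unsupported, gpu_ms, cpu_ms, transfer_ms):
    """Total time when unsupported layers fall back to the CPU.

    Each fallback pays one GPU->CPU copy and one CPU->GPU copy.
    """
    supported = n_layers - n_unsupported
    compute = supported * gpu_ms + n_unsupported * cpu_ms
    transfers = 2 * n_unsupported * transfer_ms
    return compute + transfers


def cpu_only_time(n_layers, cpu_ms):
    """Total time when every layer runs on the CPU, with no copies at all."""
    return n_layers * cpu_ms


# Hypothetical numbers, NOT measurements.
hybrid = gpu_time_with_fallback(n_layers=100, n_unsupported=30,
                                gpu_ms=0.3, cpu_ms=3.0, transfer_ms=4.0)
cpu_only = cpu_only_time(n_layers=100, cpu_ms=3.0)

print(f"GPU with CPU fallback: {hybrid:.0f} ms")
print(f"CPU only:              {cpu_only:.0f} ms")
```

With these made-up values the copies dominate and the "GPU" run ends up slower than the CPU-only one. With zero unsupported layers, the same model is far faster on GPU.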

The first thought that came to mind was: can ELU be replaced by a combination of other layers that are supported on GPU?

Looking at the formulas a bit, we came up with a formula that mimics ELU using only layers that are supported on GPU.

elu = relu(input) + alpha*(1.0 / sigmoid(relu(input) - input) - 2)

or

elu = relu(input) - alpha*relu((1-exp(input)))
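Both forms can be checked numerically. For non-negative input the extra term vanishes (sigmoid(0) = 0.5, so 1/0.5 - 2 = 0), and for negative input it reduces to alpha*(exp(input) - 1). The sketch below is plain Python written for this post, not the code used to patch the model, and the helper names are ours.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def elu(x, alpha=1.0):
    """Reference ELU: x for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def elu_via_sigmoid(x, alpha=1.0):
    """First rewrite: uses only relu, sigmoid, reciprocal and arithmetic."""
    return relu(x) + alpha * (1.0 / sigmoid(relu(x) - x) - 2.0)


def elu_via_exp(x, alpha=1.0):
    """Second rewrite: uses only relu, exp and arithmetic."""
    return relu(x) - alpha * relu(1.0 - math.exp(x))


for x in [-5.0, -1.0, -0.1, 0.0, 0.5, 3.0]:
    for alpha in [1.0, 0.5]:
        ref = elu(x, alpha)
        assert math.isclose(elu_via_sigmoid(x, alpha), ref, abs_tol=1e-9)
        assert math.isclose(elu_via_exp(x, alpha), ref, abs_tol=1e-9)

print("both rewrites match ELU on the sampled inputs")
```

One thing to keep in mind: the second form evaluates exp(input) for positive inputs too, so it can overflow for very large activations, while the first form only ever feeds sigmoid a non-negative argument.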

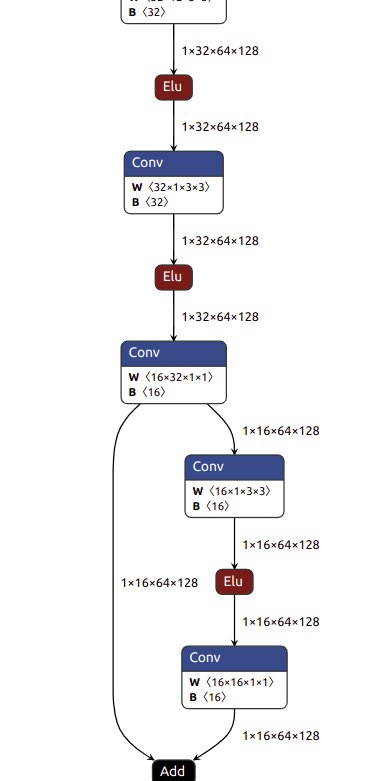

This original graph:

Was replaced by:

Now every layer is supported on GPU. I quickly loaded this model up and the predictions seemed to exactly match the original model!

But all hopes were crushed when it performed at half the speed of the original model. The reason is that the model contains many ELU layers, and replacing each of them with several layers added too many extra branches to the graph, causing poor performance.

TNN

After a lot of searching, I finally came across this library: TNN. It supported all the layers out of the box. I benchmarked the model and it finally worked with good performance. There was no Java API, so we wrote a JNI layer ourselves.

It ran well on PC with both CPU and GPU (OpenCL backend). We cross-compiled it with the Android NDK, ran it on a phone and, phew, everything finally worked.

Now the only requirement for a phone to run flowpilot is OpenCL, which most Android phones support (except some Pixel phones, those brilliant engineers shipped a broken OpenCL).
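If you want a rough idea of whether a device exposes OpenCL at all, something like the sketch below can help. It is not part of flowpilot; it just loads the OpenCL library through `ctypes` and asks the standard `clGetPlatformIDs` entry point how many platforms exist. The function name is ours, and on Android you will most likely have to pass the library path yourself (a location such as `/vendor/lib64/libOpenCL.so` is common, but that is an assumption and varies by device).

```python
import ctypes
import ctypes.util


def count_opencl_platforms(library_path=None):
    """Return how many OpenCL platforms the library reports, or 0 if unavailable."""
    path = library_path or ctypes.util.find_library("OpenCL")
    if path is None:
        return 0  # no OpenCL library found on the default search path
    try:
        lib = ctypes.CDLL(path)
        get_ids = lib.clGetPlatformIDs
    except (OSError, AttributeError):
        return 0  # library missing, unloadable, or lacking the symbol

    # cl_int clGetPlatformIDs(cl_uint num_entries,
    #                         cl_platform_id *platforms,
    #                         cl_uint *num_platforms)
    get_ids.argtypes = [ctypes.c_uint, ctypes.c_void_p,
                        ctypes.POINTER(ctypes.c_uint)]
    get_ids.restype = ctypes.c_int

    count = ctypes.c_uint(0)
    err = get_ids(0, None, ctypes.byref(count))  # query the count only
    return count.value if err == 0 else 0


print("OpenCL platforms found:", count_opencl_platforms())
```

A non-zero count only tells you that a driver is present. It does not prove the implementation actually works, which is exactly the trap with the broken builds mentioned above, so running a real benchmark is still the only reliable test.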

Next up

Vulkan is gaining a lot of traction and many edge devices now ship with Vulkan support. Implementing some layers on Vulkan could make it possible to leverage it.